My understanding: a PVC binds to a PV

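Concepts

PersistentVolume (PV)

A PV is a piece of storage set up by an administrator and is part of the cluster. Just as a node is a cluster resource, a PV is a cluster resource. PVs are volume plugins like Volumes, but their lifecycle is independent of any Pod that uses the PV. This API object captures the details of the storage implementation, be that NFS, iSCSI, or a cloud-provider-specific storage system.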

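PersistentVolumeClaim (PVC)

A PVC is a user's request for storage. It is similar to a Pod: Pods consume node resources, and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory); claims can request a specific size and access mode (for example, mounted read/write once or read-only many times).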
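To make the Pod/PVC analogy concrete, here is a minimal sketch of a claim and a Pod that mounts it. The names `demo-claim` and `demo-pod` and the `nginx` image are placeholders chosen for illustration; the `nfs` storage class is the one used in the NFS demo later in this post.

```yaml
# Hypothetical names throughout: demo-claim, demo-pod, and the nginx image
# are illustrative and not part of the walkthrough.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  accessModes:
    - ReadWriteOnce          # mounted read/write by a single node
  storageClassName: nfs      # assumption: a PV with this class exists
  resources:
    requests:
      storage: 1Gi           # requested size; the bound PV may be larger
---
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
spec:
  containers:
    - name: app
      image: nginx
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: demo-claim   # must live in the same namespace as the Pod
```

The Pod never mentions the NFS server or export path; those details stay in the PV, which is the point of the separation.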

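Static PVs

The cluster administrator creates a number of PVs. They carry the details of the real storage that is available to cluster users. They exist in the Kubernetes API and are available for consumption.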

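Dynamic provisioning

When none of the static PVs created by the administrator match a user's PersistentVolumeClaim, the cluster may try to dynamically provision a volume for the PVC. This provisioning is based on StorageClasses: the PVC must request a storage class, and the administrator must have created and configured that class for dynamic provisioning to occur. A claim that requests the class "" effectively disables dynamic provisioning for itself.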
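A sketch of what this can look like in manifests. The class name `nfs-dynamic`, the claim names, and the provisioner string `example.com/external-nfs` are all placeholders; the provisioner in particular has to be replaced with whatever provisioner is actually installed in the cluster.

```yaml
# Sketch only: "example.com/external-nfs" stands in for a real provisioner,
# and "nfs-dynamic" is a made-up class name.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-dynamic
provisioner: example.com/external-nfs
reclaimPolicy: Delete
---
# A claim that names the class asks the provisioner to create a PV on demand.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-dynamic
  resources:
    requests:
      storage: 1Gi
---
# A claim with an empty class name opts out of dynamic provisioning and can
# only bind to a pre-created PV that has no class.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-only-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
```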

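To enable dynamic provisioning based on a default storage class, the cluster administrator needs to enable the DefaultStorageClass admission controller on the API server. This can be done, for example, by making sure DefaultStorageClass is included in the comma-separated, ordered list of values for the API server's --admission-control flag (named --enable-admission-plugins since Kubernetes 1.10).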
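With that admission controller active, a claim that omits `storageClassName` is assigned whichever class is marked as the default. A minimal sketch of such a class follows; the class name and the provisioner string are placeholders, and only the annotation matters here.

```yaml
# Assumption: "default-class" and the provisioner value are illustrative.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: default-class
  annotations:
    # Marks this class as the cluster default for claims without a class.
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: example.com/external-nfs
```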

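Binding

A control loop in the master watches for new PVCs, finds a matching PV (if possible), and binds them together. If a PV was dynamically provisioned for a new PVC, the loop will always bind that PV to the PVC. Otherwise, the user always gets at least the storage they asked for, but the volume's capacity may exceed the request. Once bound, a PersistentVolumeClaim binding is exclusive, regardless of how it was bound. A PVC-to-PV binding is a one-to-one mapping.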
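As a sketch of the "at least what was asked for" rule: a hypothetical claim that requests 1Gi from the `nfsv2` class could be satisfied by the 10Gi PV `nfspv2` defined in the NFS demo later in this post. The claim name `small-claim` is made up for illustration.

```yaml
# Hypothetical claim; only the class name and sizes relate to the demo PVs.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: small-claim
spec:
  accessModes:
    - ReadWriteOnce          # must be offered by the candidate PV
  storageClassName: nfsv2    # must equal the PV's storageClassName
  resources:
    requests:
      storage: 1Gi           # any PV of 1Gi or more can satisfy this
```

Once bound, the claim reports the PV's capacity rather than the requested size, which is why the 1Gi claims in the demo output show 10Gi.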

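PVC protection

The purpose of PVC protection is to ensure that a PVC in active use by a Pod is not removed from the system, since removing it could result in data loss.

When the PVC protection alpha feature is enabled, a PVC that a user deletes while a Pod is still using it is not removed immediately. Its deletion is postponed until the PVC is no longer used by any Pod.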

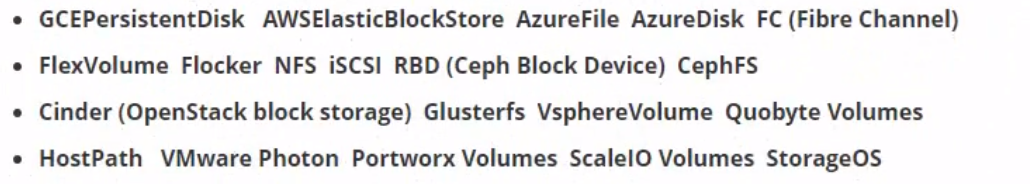

Persistent volume types

PersistentVolume types are implemented as plugins. Kubernetes currently supports the following plugin types:

For example:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-nfs
    spec:
      capacity:
        storage: 5Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: slow
      mountOptions:
        - hard
        - nfsvers=4.1
      nfs:
        path: /nfs
        server: 192.168.128.32

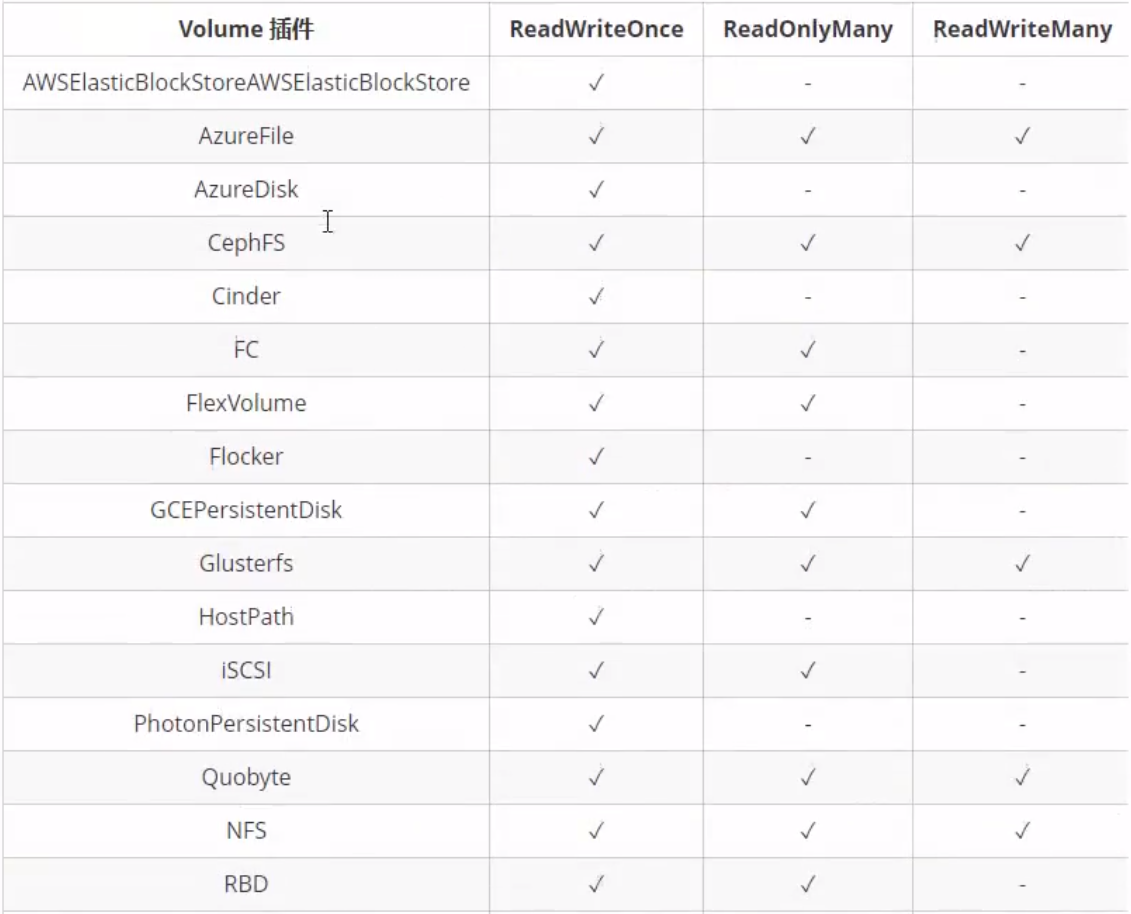

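PV access modes

A PersistentVolume can be mounted on a host in any way supported by the resource provider. As shown in the table, providers have different capabilities, and each PV's access modes are set to the specific modes supported by that particular volume. For example, NFS can support multiple read/write clients, but a specific NFS PV might be exported on the server as read-only. Each PV gets its own set of access modes describing that specific PV's capabilities.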

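ReadWriteOnce: the volume can be mounted read/write by a single node

ReadOnlyMany: the volume can be mounted read-only by many nodes

ReadWriteMany: the volume can be mounted read/write by many nodes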

On the command line, the access modes are abbreviated as:

RWO - ReadWriteOnce

ROX - ReadOnlyMany

RWX - ReadWriteMany

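Reclaim policies

Retain - manual reclamation

Recycle - basic scrub (rm -rf /thevolume/*)

Delete - the associated storage asset (such as an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume) is deleted

Currently, only NFS and HostPath support the Recycle policy. AWS EBS, GCE PD, Azure Disk, and Cinder volumes support the Delete policy.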
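The reclaim policy of an existing PV can be changed in place. A minimal sketch, assuming the fragment below is saved as `retain-patch.yaml` (a file name made up here) and applied with `kubectl patch pv nfspv1 --patch-file retain-patch.yaml` on a kubectl version that supports `--patch-file`; `nfspv1` is one of the PVs from the demo later in this post.

```yaml
# retain-patch.yaml (hypothetical file name)
# Switches the PV to manual reclamation so its data survives claim deletion.
spec:
  persistentVolumeReclaimPolicy: Retain
```

On older kubectl versions the same content can be passed inline through the `-p` flag instead.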

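Status

A volume is in one of the following states:

Available - a free resource that is not yet bound to a claim

Bound - the volume is bound to a claim

Released - the claim has been deleted, but the resource has not yet been reclaimed by the cluster

Failed - automatic reclamation of the volume failed

The command line shows the name of the PVC bound to each PV.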

Persistence demo: NFS

1 Install NFS

    yum install -y nfs-common nfs-utils rpcbind
    mkdir /nfsdata
    chmod 666 /nfsdata
    chown nfsnobody /nfsdata
    cat /etc/exports
    /nfsdata *(rw,no_root_squash,no_all_squash,sync)
    systemctl start rpcbind
    systemctl start nfs

2 Deploy the PVs

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfspv1
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: nfs
      nfs:
        path: /nfs
        server: 192.168.128.32
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfspv2
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfsv2
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfspv3
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadOnlyMany
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfs
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfspv4
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfs
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32

    [root@k8s-master pv]#
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Available           nfs                     20h
    nfspv2   10Gi       RWO            Retain           Available           nfsv2                   20h
    nfspv3   10Gi       ROX            Retain           Available           nfs                     2m24s
    nfspv4   10Gi       RWX            Retain           Available           nfs                     58s

3 Create a Service and use PVCs

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      selector:
        app: nginx
      ports:
        - port: 80
          name: web
      # Headless Service: the value is case-sensitive and must be "None"
      clusterIP: None
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      selector:
        matchLabels:
          app: nginx
      serviceName: "nginx"
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: hub.test.com/library/myapp:v1
              ports:
                - containerPort: 80
                  name: web
              volumeMounts:
                - name: www
                  mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
        - metadata:
            name: www
          spec:
            accessModes: ["ReadWriteOnce"]
            storageClassName: "nfs"
            resources:
              requests:
                storage: 1Gi

    [root@k8s-master pv]#
    service/nginx created
    statefulset.apps/web created
    [root@k8s-master pv]#
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   7d
    nginx        ClusterIP   10.102.85.247   <none>        80/TCP    9s
    [root@k8s-master pv]#
    NAME                READY   STATUS    RESTARTS   AGE
    pod                 1/1     Running   0          38h
    pod-registry-test   1/1     Running   0          39h
    pod-vol             2/2     Running   231        38h
    web-0               1/1     Running   0          4s
    web-1               0/1     Pending   0          1s
    [root@k8s-master pv]#
    Name:           web-1
    Namespace:      default
    Priority:       0
    Node:           <none>
    Labels:         app=nginx
                    controller-revision-hash=web-59c46fbcb8
                    statefulset.kubernetes.io/pod-name=web-1
    Annotations:    <none>
    Status:         Pending
    IP:
    Controlled By:  StatefulSet/web
    Containers:
      nginx:
        Image:        hub.test.com/library/myapp:v1
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:
          /usr/share/nginx/html from www (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-89jrq (ro)
    Conditions:
      Type           Status
      PodScheduled   False
    Volumes:
      www:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  www-web-1
        ReadOnly:   false
      default-token-89jrq:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-89jrq
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason            Age                From               Message
      ----     ------            ----               ----               -------
      Warning  FailedScheduling  43s (x2 over 43s)  default-scheduler  pod has unbound immediate PersistentVolumeClaims (repeated 2 times)
    ## No volume was bound for web-1. Checking the PVs shows that only one PV met the requirements
    [root@k8s-master pv]# kubectl get pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Bound       default/www-web-0   nfs                     20h
    nfspv2   10Gi       RWO            Retain           Available                       nfsv2                   20h
    nfspv3   10Gi       ROX            Retain           Available                       nfs                     11m
    nfspv4   10Gi       RWX            Retain           Available                       nfs                     9m39s
    ## Create two more PVs, reusing the earlier template
    [root@k8s-master pv]# cat pv3.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfspv5
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfs
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfspv6
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfs
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32
    [root@k8s-master pv]# kubectl create -f pv3.yaml
    persistentvolume/nfspv5 created
    persistentvolume/nfspv6 created
    [root@k8s-master pv]# kubectl get pods
    NAME    READY   STATUS    RESTARTS   AGE
    web-0   1/1     Running   0          9s
    web-1   1/1     Running   0          7s
    web-2   1/1     Running   0          5s
    [root@k8s-master pv]# kubectl get pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Bound       default/www-web-0   nfs                     20h
    nfspv2   10Gi       RWO            Retain           Available                       nfsv2                   20h
    nfspv3   10Gi       ROX            Retain           Available                       nfs                     34m
    nfspv4   10Gi       RWX            Retain           Available                       nfs                     33m
    nfspv5   10Gi       RWO            Retain           Bound       default/www-web-2   nfs                     21m
    nfspv6   10Gi       RWO            Retain           Bound       default/www-web-1   nfs                     21m
    [root@k8s-master pv]# kubectl get pvc
    NAME        STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    www-web-0   Bound    nfspv1   10Gi       RWO            nfs            57m
    www-web-1   Bound    nfspv6   10Gi       RWO            nfs            57m
    www-web-2   Bound    nfspv5   10Gi       RWO            nfs            46m
    [root@k8s-master pv]#

Check the Pod details and verify that the Pods can be reached. An index.html file must already exist on the NFS export.

    [root@k8s-master pv]#
    NAME    READY   STATUS    RESTARTS   AGE     IP             NODE         NOMINATED NODE   READINESS GATES
    web-0   1/1     Running   0          4m23s   10.244.2.197   k8s-node02   <none>           <none>
    web-1   1/1     Running   0          4m21s   10.244.1.115   k8s-node01   <none>           <none>
    web-2   1/1     Running   0          4m19s   10.244.2.198   k8s-node02   <none>           <none>
    /nfs
    [root@k8s-master pv]#
    Tue Feb 4 17:10:32 CST 2020

Delete the web-0 Pod and check again

    [root@k8s-master pv]#
    pod "web-0" deleted
    [root@k8s-master pv]#
    NAME    READY   STATUS    RESTARTS   AGE
    web-0   1/1     Running   0          3s
    web-1   1/1     Running   0          12m
    web-2   1/1     Running   0          12m
    [root@k8s-master pv]#
    NAME    READY   STATUS    RESTARTS   AGE   IP             NODE         NOMINATED NODE   READINESS GATES
    web-0   1/1     Running   0          8s    10.244.2.199   k8s-node02   <none>           <none>
    web-1   1/1     Running   0          12m   10.244.1.115   k8s-node01   <none>           <none>
    web-2   1/1     Running   0          12m   10.244.2.198   k8s-node02   <none>           <none>
    [root@k8s-master pv]#
    Tue Feb 4 17:10:32 CST 2020

A Pod with the same name is created, and it still serves the same data.

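About StatefulSet

Pod names (the network identity) follow the pattern $(StatefulSet name)-$(ordinal); in the example above: web-0, web-1, web-2.

StatefulSet gives each Pod replica a DNS name of the form $(pod name).$(headless service name). Services therefore talk to each other through Pod DNS names rather than Pod IPs: when the node hosting a Pod fails, the Pod is moved to another node and its IP changes, but its DNS name does not.

StatefulSet uses a headless Service to control the Pods' domain. The FQDN of that domain is $(service name).$(namespace).svc.cluster.local, where "cluster.local" is the cluster domain.

Based on volumeClaimTemplates, one PVC is created for each Pod. PVCs are named $(volumeClaimTemplates name)-$(pod name). In the example above the template name is www (the same name referenced under volumeMounts) and the Pod names are web-[0-2], so the PVCs created are www-web-0, www-web-1, and www-web-2.

Deleting a Pod does not delete its PVC. Manually deleting the PVC releases the PV.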
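Applying the PVC naming rule to the StatefulSet in this demo, the claim generated for Pod web-0 would look roughly like the sketch below. This is an approximation written by hand from the volumeClaimTemplates entry, not output captured from the cluster; metadata populated by the server is omitted.

```yaml
# Approximate shape of the claim generated for Pod web-0.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: www-web-0            # <volumeClaimTemplates name>-<pod name>
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs
  resources:
    requests:
      storage: 1Gi
```

Because the name is derived only from the template name and the Pod name, a recreated web-0 finds the same claim again, which is what keeps its data stable.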

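StatefulSet start and stop ordering:

Ordered deployment: when a StatefulSet with multiple Pod replicas is deployed, the Pods are created sequentially (from 0 to N-1), and all preceding Pods must be Running and Ready before the next one is started.

Ordered deletion: when Pods are deleted, they are terminated in order from N-1 to 0.

Ordered scaling: when the set is scaled up, as with deployment, all Pods before the new one must be Running and Ready.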

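StatefulSet use cases:

Stable persistent storage: a Pod can still reach the same persistent data after being rescheduled. This is implemented with PVCs.

Stable network identity: a Pod's name and hostname stay the same after it is rescheduled.

Ordered deployment and ordered scale-up, enforced by the StatefulSet controller, which waits for each Pod to be Running and Ready before creating the next one.

Ordered scale-down.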

Access via DNS: an example

Create pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp
      labels:
        app: myapp
        version: v1
    spec:
      containers:
        - name: app
          image: hub.test.com/library/myapp:v1

Create the Pod

    [root@k8s-master ~]#
    pod/myapp created
    [root@k8s-master ~]#
    NAME    READY   STATUS    RESTARTS   AGE
    myapp   1/1     Running   0          3s
    web-0   1/1     Running   0          40m
    web-1   1/1     Running   0          40m
    web-2   1/1     Running   0          40m

Reach web-0 from this Pod

    [root@k8s-master ~]#
    / #
    PING web-0.nginx (10.244.2.200): 56 data bytes
    64 bytes from 10.244.2.200: seq=0 ttl=64 time=0.114 ms
    64 bytes from 10.244.2.200: seq=1 ttl=64 time=0.085 ms
    ^C
    --- web-0.nginx ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.085/0.099/0.114 ms

Delete web-0, then reach it again. The same DNS name now resolves to the new Pod IP.

    / #
    [root@k8s-master ~]#
    pod "web-0" deleted
    [root@k8s-master ~]#
    NAME    READY   STATUS    RESTARTS   AGE
    myapp   1/1     Running   0          2m34s
    web-0   1/1     Running   0          3s
    web-1   1/1     Running   0          43m
    web-2   1/1     Running   0          43m
    [root@k8s-master ~]#
    / #
    PING web-0.nginx (10.244.2.203): 56 data bytes
    64 bytes from 10.244.2.203: seq=0 ttl=64 time=0.085 ms
    64 bytes from 10.244.2.203: seq=1 ttl=64 time=0.113 ms
    ^C
    --- web-0.nginx ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.085/0.099/0.113 ms

Removing the binding between PV and PVC

Check the Pods, PVs, and PVCs

    [root@k8s-master ~]#
    NAME    READY   STATUS    RESTARTS   AGE
    myapp   1/1     Running   0          31m
    web-0   1/1     Running   0          29m
    web-1   1/1     Running   0          72m
    web-2   1/1     Running   0          72m
    [root@k8s-master ~]#
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Bound       default/www-web-0   nfs                     22h
    nfspv2   10Gi       RWO            Retain           Available                       nfsv2                   22h
    nfspv3   10Gi       ROX            Retain           Available                       nfs                     121m
    nfspv4   10Gi       RWX            Retain           Available                       nfs                     119m
    nfspv5   10Gi       RWO            Retain           Bound       default/www-web-2   nfs                     107m
    nfspv6   10Gi       RWO            Retain           Bound       default/www-web-1   nfs                     107m
    [root@k8s-master ~]#
    NAME        STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    www-web-0   Bound    nfspv1   10Gi       RWO            nfs            112m
    www-web-1   Bound    nfspv6   10Gi       RWO            nfs            112m
    www-web-2   Bound    nfspv5   10Gi       RWO            nfs            102m

Delete the StatefulSet. The PVCs and their PV bindings remain.

    [root@k8s-master pv]#
    service "nginx" deleted
    statefulset.apps "web" deleted
    [root@k8s-master pv]#
    NAME    READY   STATUS        RESTARTS   AGE
    myapp   1/1     Running       0          32m
    web-2   0/1     Terminating   0          73m
    [root@k8s-master pv]#
    No resources found.
    [root@k8s-master pv]#
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Bound       default/www-web-0   nfs                     22h
    nfspv2   10Gi       RWO            Retain           Available                       nfsv2                   22h
    nfspv3   10Gi       ROX            Retain           Available                       nfs                     122m
    nfspv4   10Gi       RWX            Retain           Available                       nfs                     121m
    nfspv5   10Gi       RWO            Retain           Bound       default/www-web-2   nfs                     108m
    nfspv6   10Gi       RWO            Retain           Bound       default/www-web-1   nfs                     108m
    [root@k8s-master pv]#
    NAME        STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    www-web-0   Bound    nfspv1   10Gi       RWO            nfs            114m
    www-web-1   Bound    nfspv6   10Gi       RWO            nfs            114m
    www-web-2   Bound    nfspv5   10Gi       RWO            nfs            103m

Delete the PVCs

    [root@k8s-master pv]#
    persistentvolumeclaim "www-web-0" deleted
    persistentvolumeclaim "www-web-1" deleted
    persistentvolumeclaim "www-web-2" deleted
    [root@k8s-master pv]#
    No resources found.

Check the PVs: they still reference the deleted claims (status Released rather than Available)

    [root@k8s-master pv]# kubectl get pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Released    default/www-web-0   nfs                     22h
    nfspv2   10Gi       RWO            Retain           Available                       nfsv2                   22h
    nfspv3   10Gi       ROX            Retain           Available                       nfs                     129m
    nfspv4   10Gi       RWX            Retain           Available                       nfs                     127m
    nfspv5   10Gi       RWO            Retain           Released    default/www-web-2   nfs                     115m
    nfspv6   10Gi       RWO            Retain           Released    default/www-web-1   nfs                     115m

View the PV details

    [root@k8s-master pv]#
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      annotations:
        pv.kubernetes.io/bound-by-controller: "yes"
      creationTimestamp: "2020-02-03T12:27:09Z"
      finalizers:
      - kubernetes.io/pv-protection
      name: nfspv1
      resourceVersion: "931314"
      selfLink: /api/v1/persistentvolumes/nfspv1
      uid: 96885487-396e-432a-bbc7-4a175f4d008a
    spec:
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 10Gi
      claimRef:
        apiVersion: v1
        kind: PersistentVolumeClaim
        name: www-web-0
        namespace: default
        resourceVersion: "931311"
        uid: 4285e38c-9dd9-49f3-9737-dac799397c13
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: nfs
      volumeMode: Filesystem
    status:
      phase: Bound

The PV's spec.claimRef still holds the binding information. Remove that block with kubectl edit pv nfspv1, leaving:

    [root@k8s-master pv]#
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      annotations:
        pv.kubernetes.io/bound-by-controller: "yes"
      creationTimestamp: "2020-02-03T12:27:09Z"
      finalizers:
      - kubernetes.io/pv-protection
      name: nfspv1
      resourceVersion: "931314"
      selfLink: /api/v1/persistentvolumes/nfspv1
      uid: 96885487-396e-432a-bbc7-4a175f4d008a
    spec:
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 10Gi
      nfs:
        path: /zfspool/nfs
        server: 192.168.128.32
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: nfs
      volumeMode: Filesystem
    status:
      phase: Bound

Check the PVs again: the status of nfspv1 has changed to Available

    [root@k8s-master pv]#
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
    nfspv1   10Gi       RWO            Recycle          Available                       nfs                     22h
    nfspv2   10Gi       RWO            Retain           Available                       nfsv2                   22h
    nfspv3   10Gi       ROX            Retain           Available                       nfs                     129m
    nfspv4   10Gi       RWX            Retain           Available                       nfs                     128m
    nfspv5   10Gi       RWO            Retain           Released    default/www-web-2   nfs                     115m
    nfspv6   10Gi       RWO            Retain           Released    default/www-web-1   nfs                     115m
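The interactive `kubectl edit` step can also be done with a patch, which is easier to script. A minimal sketch, assuming the fragment below is saved as `clear-claimref.yaml` (a file name made up here) and applied to one of the PVs that is still Released, for example with `kubectl patch pv nfspv5 --patch-file clear-claimref.yaml` on a kubectl version that supports `--patch-file`.

```yaml
# clear-claimref.yaml (hypothetical file name)
# Setting the field to null in the patch removes it from the PV,
# which lets the PV return to Available.
spec:
  claimRef: null
```

For a PV with the Retain policy, the old data is still on the volume after this, so it should be cleaned up by hand before the PV is handed to a new claim.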

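Other notes

1 emptyDir and hostPath volumes are convenient but not durable. Kubernetes supports volumes backed by many kinds of external storage.

2 PVs and PVCs separate the responsibilities of administrators and ordinary users, which suits production environments better.

3 StorageClasses enable more efficient dynamic provisioning.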